DANCE Platform version 2

This page provides the instructions needed to download, install, and run the DANCE software platform version 2. The platform is based on EyesWeb XMI (http://www.infomus.org/eyesweb_eng.php) and allows users to perform synchronized recording, playback, and analysis of multimodal data streams. Details about the platform architecture and data stream formats are provided in Deliverable 4.2.

1. Download and install EyesWeb XMI

The updated version of EyesWeb XMI can be downloaded here: ftp://ftp.infomus.org/Evaluate/EyesWeb/XMI/Version_5.7.x/EyesWeb_XMI_setup_5.7.0.0.exe

Instructions to download and install EyesWeb XMI can be found on the DANCE software platform version 1 page.

2. Download the DANCE example tools and patches

The DANCE example tools and patches are programs, written to be loaded and executed in EyesWeb XMI, that allow the user to record, play back, and analyze multimodal data (video, audio, motion capture, sensors). To run the tools, download the corresponding installers, launch them, and execute the tools as normal Windows applications. To run the patches, download them and load them into the EyesWeb application (see step 1 on how to download and install EyesWeb). The current version of the DANCE example tools and patches includes applications for the following tasks:

a) Tools and patches for recording and playing back multimodal data

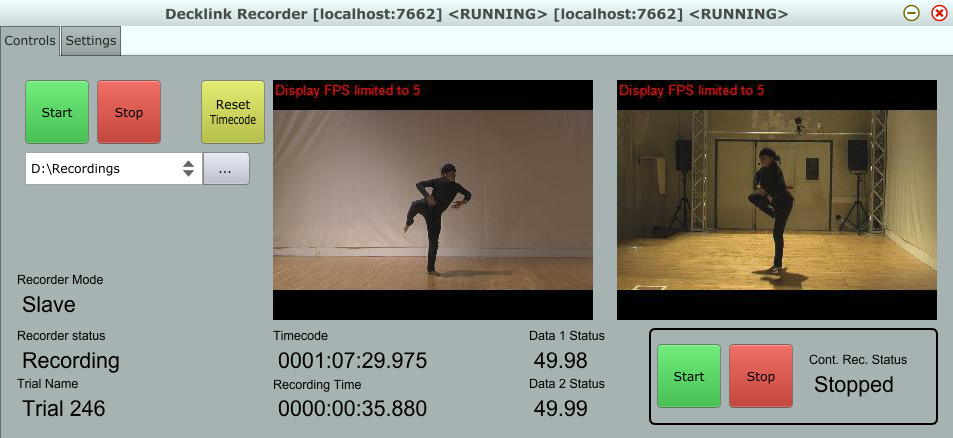

Video recorder tool (download installer) [Warning: a Decklink frame grabber is required to run this tool]

This module, depicted below, records the video streams of multiple video sources. Each video source is stored in a separate video+audio file (e.g., AVI, MP4). To guarantee synchronization with the other data streams, the audio channels of the generated files contain the SMPTE time signal encoded as audio. During playback, the SMPTE signal is decoded from the audio to extract timing information and play the video stream in sync.

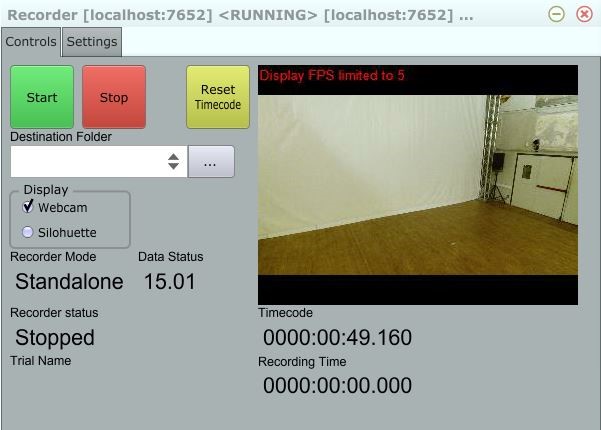

Kinect recorder tool (download installer) [Warning: a Kinect 2 is needed to run the tool]

The Kinect recorder tool is depicted below:

The tool shows the current framerate in the "Data Status" field (15.01 frames per second in the example), the name used for this trial (trial_000; progressive numbers are automatically assigned to each trial), and the value of the reference clock (format HHHH:MM:SS.mmm; 0000:00:49.160 in the example).
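The reference clock string can be converted to and from milliseconds when aligning recordings in external scripts. The following is a minimal sketch, assuming only the HHHH:MM:SS.mmm layout shown above; the function names `clock_to_ms` and `ms_to_clock` are hypothetical and are not part of the DANCE tools.

```python
import re

# Assumed layout, taken from the text: HHHH:MM:SS.mmm
_CLOCK_RE = re.compile(r"^(\d{4}):(\d{2}):(\d{2})\.(\d{3})$")


def clock_to_ms(clock: str) -> int:
    """Convert a reference-clock string to a number of milliseconds."""
    match = _CLOCK_RE.match(clock.strip())
    if match is None:
        raise ValueError(f"unexpected clock format: {clock!r}")
    hours, minutes, seconds, millis = (int(g) for g in match.groups())
    if minutes > 59 or seconds > 59:
        raise ValueError(f"minutes/seconds out of range: {clock!r}")
    return ((hours * 60 + minutes) * 60 + seconds) * 1000 + millis


def ms_to_clock(total_ms: int) -> str:
    """Format a non-negative number of milliseconds as HHHH:MM:SS.mmm."""
    if total_ms < 0:
        raise ValueError("total_ms must be non-negative")
    total_seconds, millis = divmod(total_ms, 1000)
    total_minutes, seconds = divmod(total_seconds, 60)
    hours, minutes = divmod(total_minutes, 60)
    return f"{hours:04d}:{minutes:02d}:{seconds:02d}.{millis:03d}"


if __name__ == "__main__":
    print(clock_to_ms("0000:00:49.160"))
    print(ms_to_clock(49160))
```

Working in integer milliseconds avoids floating-point rounding when comparing timestamps from different recorders.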

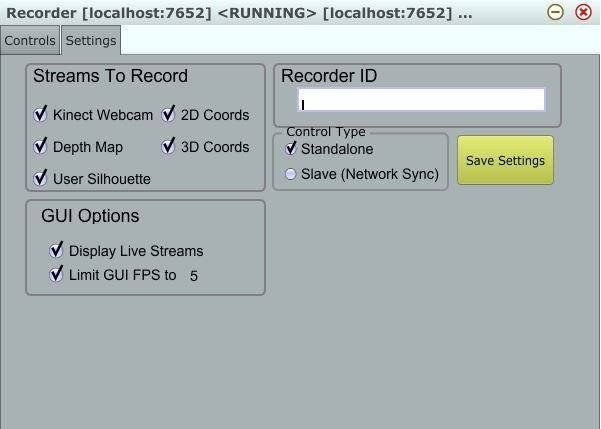

The options panel, displayed below, has been redesigned in the new version. It lets you choose which Kinect streams to record, select the recording mode (standalone or slave), and assign an ID to the recording module. The ID will be reported in the master interface, as described in the previous section.

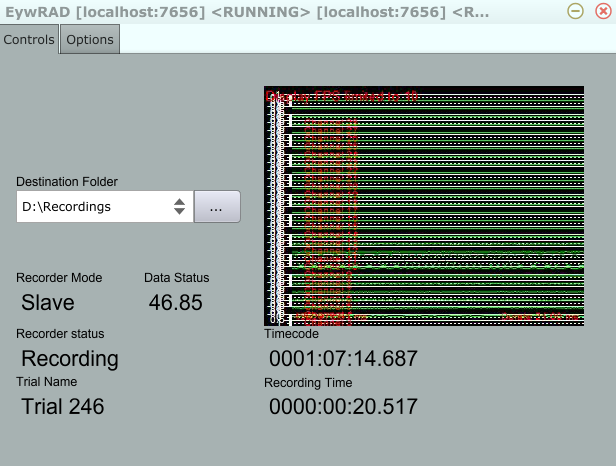

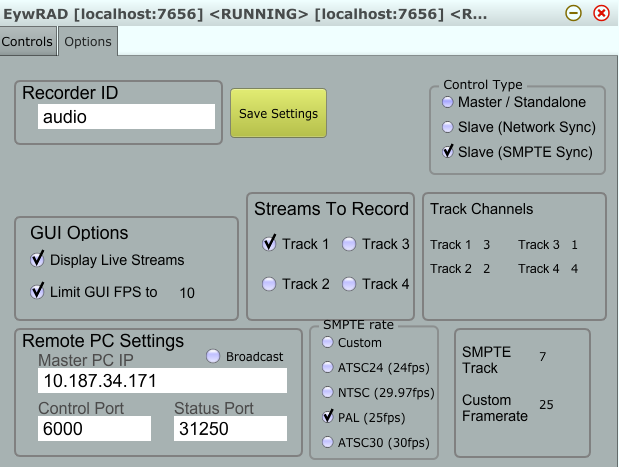

Audio recorder tool (download installer) [Warning: an ASIO compatible sound card is needed to run the tool]

The audio recorder tool is depicted below:

It has been improved from the previous version by adding the possibility of recording several stereo audio tracks at the same time. The recorder is currently limited, mainly for performance reasons, to 8 stereo tracks, that is, 16 mono channels. The recorder interface shows the input audio signals as well as the number of recorded audio buffers per second.

The options panel allows the experimenter to set the audio recorder ID and the input audio tracks to be recorded.

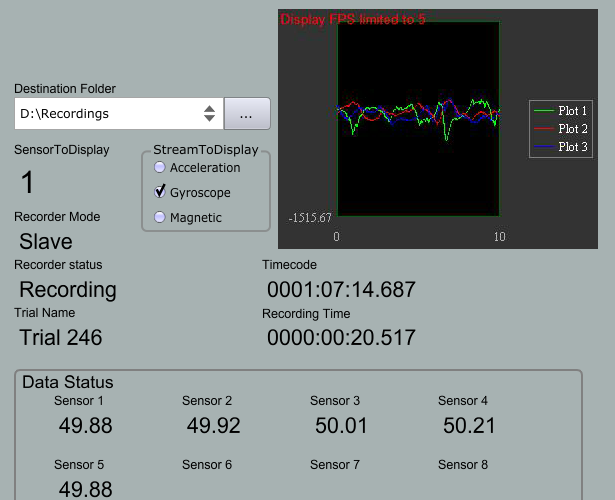

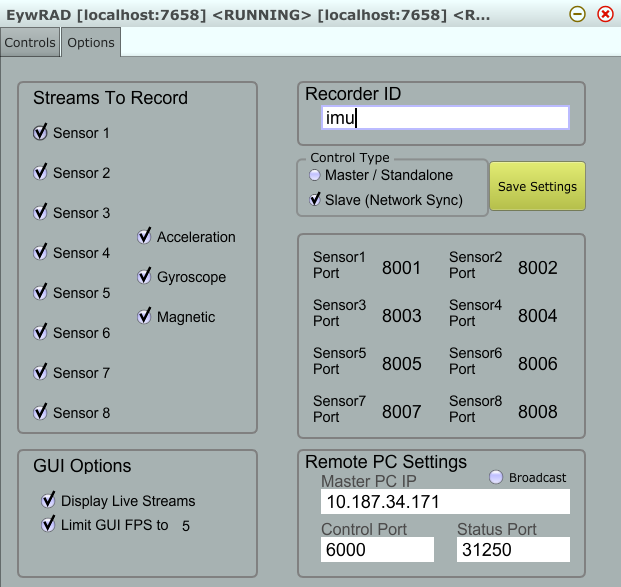

IMU recorder tool (download installer) [Warning: one or more X-OSC sensors are needed to run the tool]

The IMU recorder tool is depicted below:

The graph shows the values selected by the user (acceleration, gyroscope, or compass) for each of the four IMUs. In the lower left you may read the current framerate of each of the four sensors (49.95 samples per second). Below the graph you may see both the trial name and the reference clock.

The recording tool saves the data in txt files, in a format that is easy to read with external software (e.g., MATLAB) and that can also be read by EyesWeb itself for playback or analysis purposes. The options panel allows you to configure the working mode of the recorder.

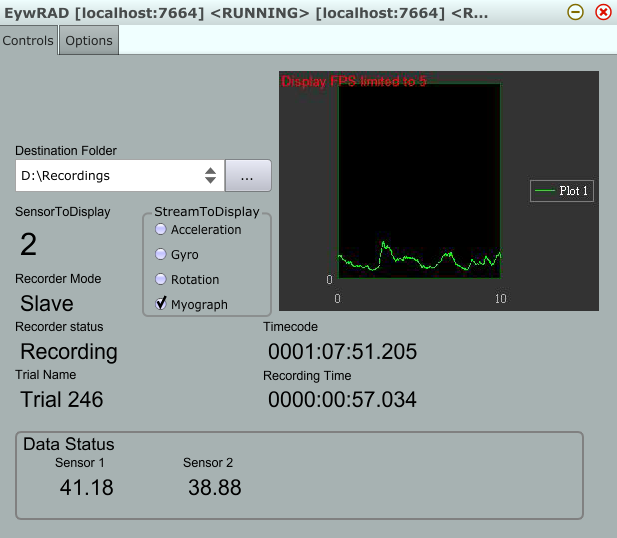

Myo recorder tool (download installer) [Warning: 2 Myo sensors are needed to run the tool]

This tool was added during the second year of DANCE. It records data coming from 2 Myo sensors at about 40 fps. The data consists of muscle contraction (1 value between 0 and 1), acceleration, gyroscope, and rotation (yaw, pitch, roll).

Playback and quality extraction tools (download installer)

We provide 2 tools along with the DANCE Platform version 2:

- a data playback tool

- a quality of movement extraction tool

The installer contains sample data consisting of 2 trials recorded in DANCE during January and February 2017. Both trials consist of multimodal data coming from Inertial Measurement Units (accelerometers), electromyographic sensors, video cameras, and a microphone. Each trial focuses on a single movement quality from the set of movement qualities identified by the UNIGE partner in collaboration with the choreographer V. Sieni. See Deliverable 2.2 for more details.

The 2 trials are:

- Trial 84: a dancer performing "fragile" movements (i.e., a sequence of non-rhythmical upper body cracks or leg releases; movements on the boundary between balance and fall, time cuts followed by a movement re-planning; the resulting movement is non-predictable, interrupted, uncertain)

- Trial 246: a dancer performing "light" movements (i.e., fluid movement together with a lack of vertical acceleration, mainly toward the floor, in particular of forearms and knees; for each vertical downward movement there is an opposite harmonic counterbalancing upward movement, simultaneous or consequent; there can be a conversion of gravity into movement on the horizontal plane using rotations and a spread of gravity on the horizontal dimension)

For a detailed description of the above and other movement qualities please refer to Deliverable 2.2.

Playback

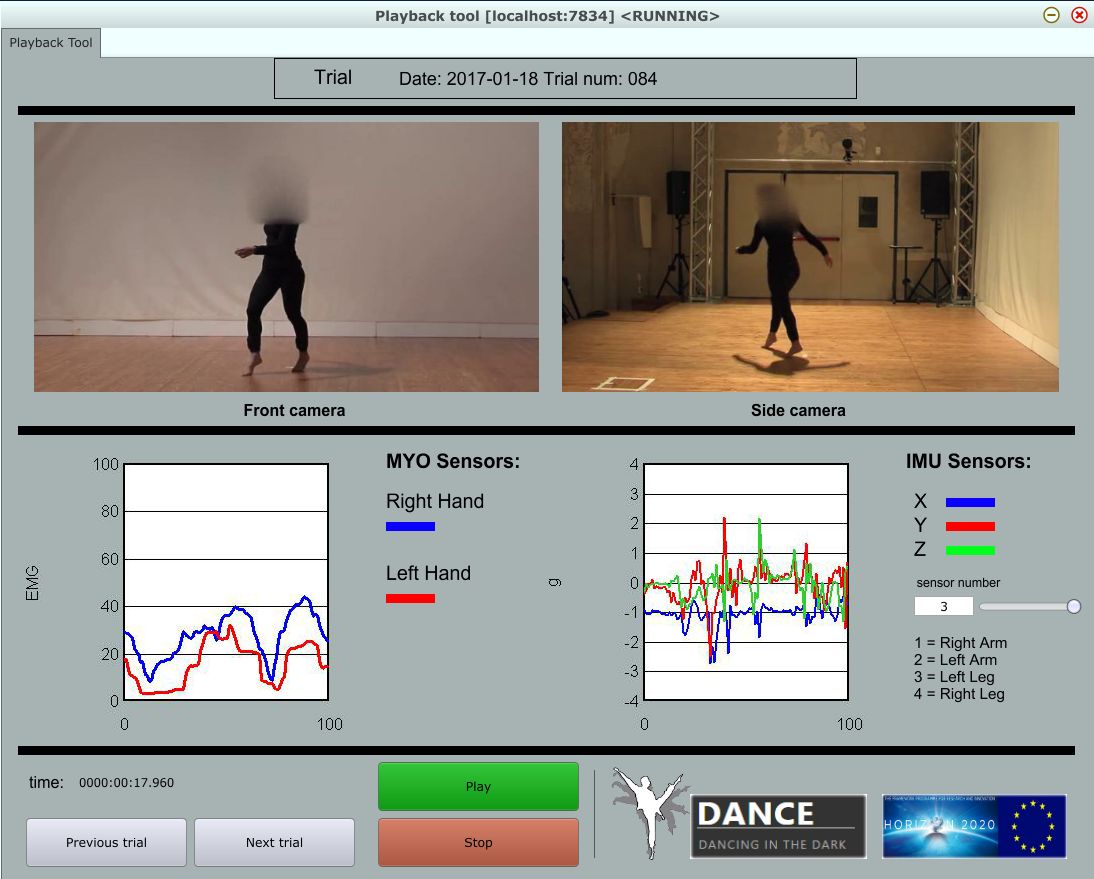

The tool window is depicted below:

The trial name is reported at the top of the window. In the upper part of the window, the video streams are played back. In the lower part of the window, the EMG data (on the left) and the inertial data (on the right) are displayed in a time window of 100 samples. At the bottom, there are buttons to move between the trials and to start/stop the playback. The tool is automatically installed in the EyesWeb XMI folder, for example in C:\Program Files (x86)\EyesWeb XMI 5.7.0.0\DANCE_Playback_Tool.

The installer installs 2 example trials in the subfolders "2017-01-18" and "2017-02-01". If more trials are found, they are automatically added to the playback tool. Once started, the tool also sends the inertial sensor data through OSC messages. This data can be received and analyzed by other software modules (for example, the movement features extraction tool; see below and Deliverable 3.2).

Quality extraction

In the DANCE project we aim to advance the state of the art in the automated analysis of expressive movement. We consider movement as a communication channel allowing humans to express and perceive implicit high-level messages, such as emotional states, social bonds, and so on. That is, we are not interested in physical space occupation or movement direction per se, or in “functional” physical movements: our interest is in the implications at the expressive level. For example, whether a hand moves to the left or to the right may be irrelevant, whereas the level of fluidity or impulsiveness of that movement might be relevant.

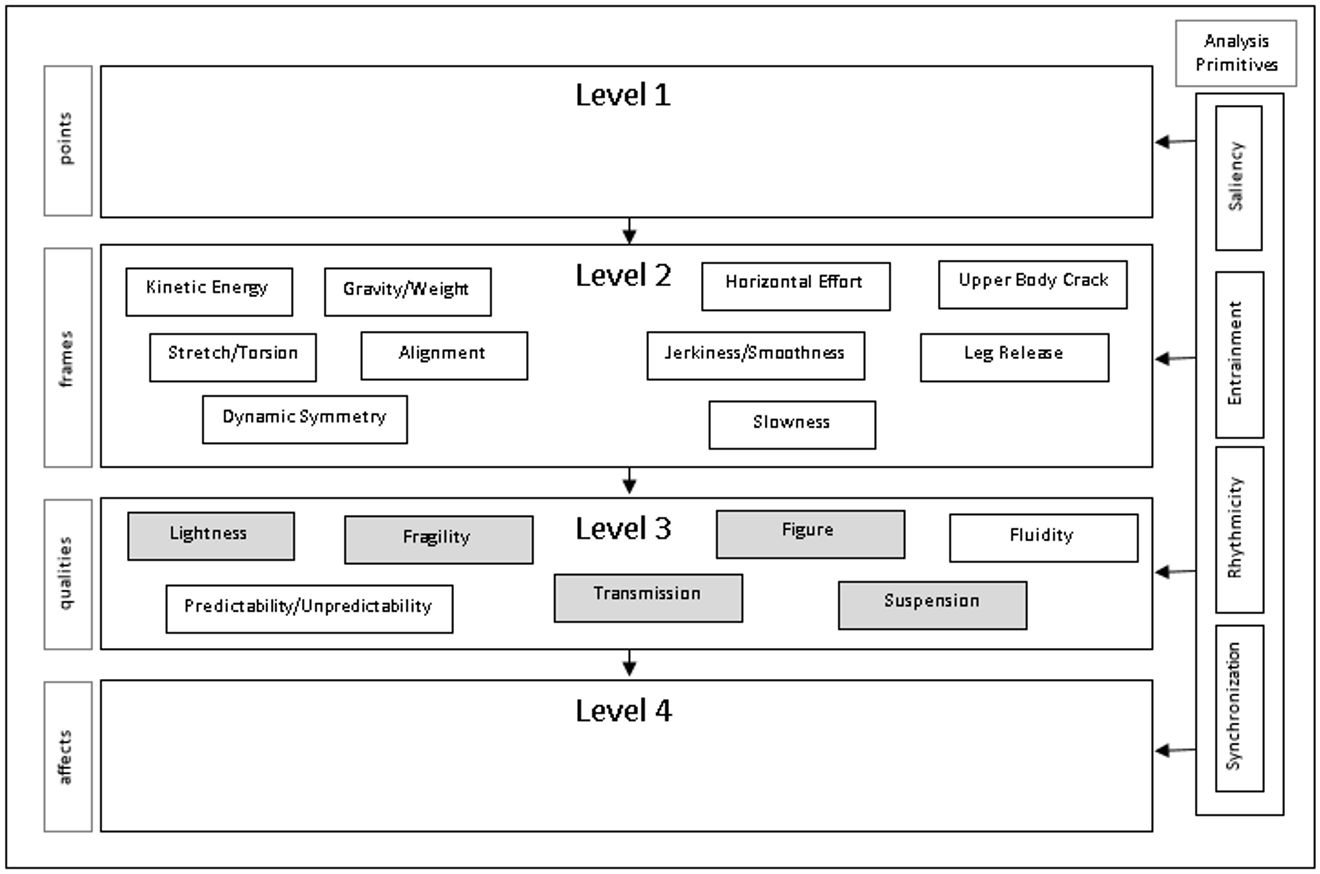

We propose a Multi-Layered Computational Framework of Qualities consisting of several layers, ranging from physical signals to high-level qualities, that addresses several aspects of movement analysis at different spatial and temporal scales. The features computed at lower layers contribute to the computation of the features at higher layers, which are usually related to more abstract concepts. In more detail, within the framework, the movement quality analysis is organized on four levels:

- points: physical data that can be detected by exploiting sensors in real-time (for example, position/orientation of the body planes)

- frames: physical and sensorial data, not subject to interpretation, detected uniquely starting from instantaneous physical data on the shortest time span needed for their definition and depending on the characteristics of the human sensorial channels

- qualities: perceptual features, interpretable and predictable, with a given error and correction, starting from different constellations of physical and sensory data from level 2, on the time span needed for their perception (for example, lightness is perceived as a delay on the horizontal plane or as a balance between vertical upward/downward pushes)

- affects: perceptual and contextual features, interpretable and predictable, with a given error and correction, starting from a narration of different qualities, on a large time span needed for their introjection (for example, tension created by a pressing sequence of movement cracks/releases or by a sudden break of lightness).

The first layer is devoted to capturing and preprocessing data (e.g., computing kinematic features such as velocity and trajectories) from sensor systems (e.g., video, motion capture, audio, or wearable sensors). The second layer computes low-level motion features at a small time scale (i.e., observable frame-by-frame), such as kinetic energy or smoothness, from such data. The third one segments the flow of movements into a series of single units (or gestures) and computes a set of mid-level features, i.e., complex features that are usually extracted on groups of joints or the whole body and require significantly longer temporal intervals to be observed (i.e., between 0.5s and 5s). Finally, the fourth layer represents even more abstract concepts such as emotional states of the displayer, social attitudes, and the user’s engagement in a full-body interaction.

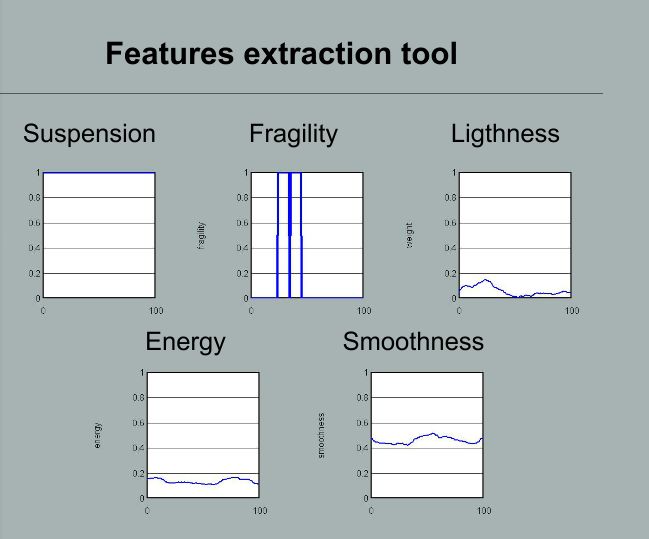

Along with the DANCE Platform version 2 we provide a quality of movement extraction tool capable of analyzing pre-recorded multimodal data and extracting the following qualities:

- Suspension: it is a non-directional retention of energy on one of the body planes. The body, or some parts of it, may be waving or rippling; the movements are highly predictable. We detect it by checking whether the maximum of the energy is retained over a period of time on one body plane.

- Fragility: it is a sequence of non-rhythmical upper body cracks or leg releases. Movements on the boundary between balance and fall, time cuts followed by a movement re-planning. The resulting movement is non-predictable, interrupted, and uncertain. We detect irregular sequences of upper body cracks or leg releases in a 1-second time window.

- Lightness: this quality is related to Laban’s Weight quality (for details, see: Rudolf Laban and Frederick C. Lawrence. 1947: Effort. Macdonald & Evans). It is computed by extracting the vertical component of the Energy, normalized to the overall amount of Energy in the movement.

- Kinetic Energy of a moving body (or part of a body): KE = 1/2*mass*velocity^2

- Smoothness: it is defined as the inverse of the third derivative of position (the jerk)
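The last three definitions in the list can be illustrated with a short sketch for a single tracked point. This is not the implementation used by the extraction tool. The finite-difference derivatives, the z axis as the vertical axis, averaging the jerk magnitude over a window, and the small `eps` guard against division by zero are all assumptions made for the example, and the function names are hypothetical. The kinetic energy uses the standard squared-velocity form.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def derivative(samples: Sequence[Vec3], dt: float) -> List[Vec3]:
    """Forward finite difference of a sequence of 3D samples."""
    return [
        tuple((b[k] - a[k]) / dt for k in range(3))
        for a, b in zip(samples, samples[1:])
    ]


def kinetic_energy(mass: float, velocity: Vec3) -> float:
    """KE = 1/2 * mass * |velocity|^2."""
    return 0.5 * mass * sum(c * c for c in velocity)


def lightness_ratio(mass: float, velocities: Sequence[Vec3], vertical_axis: int = 2) -> float:
    """Vertical energy component divided by the overall energy in the window."""
    vertical = sum(0.5 * mass * v[vertical_axis] ** 2 for v in velocities)
    total = sum(kinetic_energy(mass, v) for v in velocities)
    return vertical / total if total > 0.0 else 0.0


def smoothness(positions: Sequence[Vec3], dt: float, eps: float = 1e-9) -> float:
    """Inverse of the mean magnitude of the third derivative of position (jerk)."""
    if len(positions) < 4:
        raise ValueError("at least 4 position samples are needed")
    jerk = derivative(derivative(derivative(positions, dt), dt), dt)
    mean_jerk = sum(math.sqrt(sum(c * c for c in j)) for j in jerk) / len(jerk)
    return 1.0 / (mean_jerk + eps)


if __name__ == "__main__":
    # Synthetic trajectory: constant velocity along x, so the jerk is zero.
    path = [(0.1 * i, 0.0, 0.0) for i in range(10)]
    vel = derivative(path, dt=0.02)
    print(kinetic_energy(1.0, vel[0]), lightness_ratio(1.0, vel), smoothness(path, 0.02))
```

With these assumptions, a trajectory with little vertical motion yields a ratio close to 0, and a trajectory with large jerk yields a low smoothness value.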